12. RNN (part a)

We are finally ready to talk about Recurrent Neural Networks (or RNNs), where we will be opening the doors to new content!


RNNs are based on the same principles as those behind FFNNs, which is why we spent so much time reminding ourselves of the feedforward and backpropagation steps that are used in the training phase.

There are two main differences between FFNNs and RNNs. The Recurrent Neural Network uses:

- sequences as inputs in the training phase, and

- memory elements

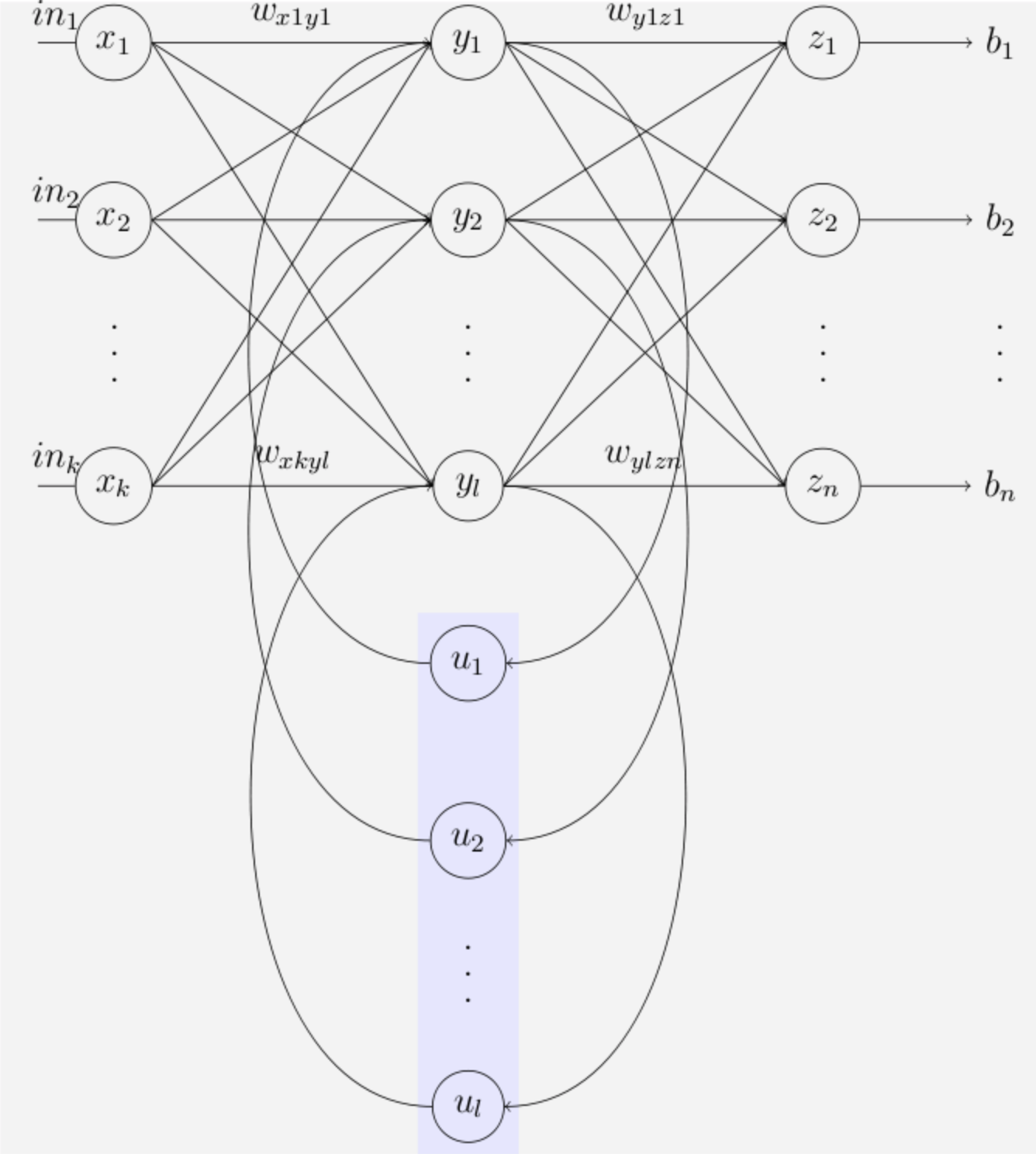

Memory is defined as the output of the hidden-layer neurons, which serves as additional input to the network at the next time step.
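One common way to write this memory mechanism is shown below. The notation is an assumption, not taken from this lesson. $x_t$ is the input at time step $t$, $h_t$ is the hidden-layer output (the memory), $y_t$ is the network output, $W_x$, $W_h$, $W_y$ are weight matrices, $b_h$, $b_y$ are biases, and $\sigma_h$, $\sigma_y$ are activation functions. The initial memory $h_0$ is typically set to zeros.

```latex
\begin{aligned}
h_t &= \sigma_h\left(W_x x_t + W_h h_{t-1} + b_h\right) \\
y_t &= \sigma_y\left(W_y h_t + b_y\right)
\end{aligned}
```

The only difference from a feedforward layer is the extra term $W_h h_{t-1}$, which feeds the previous hidden output back in as input.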

The basic three-layer neural network whose hidden-layer outputs are fed back as memory inputs is called the Elman Network, and it is depicted in the following picture:

Elman Network, source: Wikipedia
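The forward pass of an Elman-style network can be sketched in plain Python. This is a minimal sketch under several assumptions: `tanh` hidden activations, a linear output layer, no bias terms (omitted for brevity), and a memory initialized to zeros. The function name `elman_forward` and the weight names `w_x`, `w_h`, `w_y` are hypothetical and used only for illustration.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def elman_forward(
    xs: Sequence[Sequence[float]],
    w_x: Matrix,  # hypothetical input -> hidden weights (hidden x input)
    w_h: Matrix,  # hypothetical context (previous hidden) -> hidden weights (hidden x hidden)
    w_y: Matrix,  # hypothetical hidden -> output weights (output x hidden)
) -> Tuple[List[Vector], List[Vector]]:
    """Run an Elman-style RNN over a sequence; return (hidden states, outputs).

    Assumptions: tanh hidden layer, linear output layer, no biases.
    """
    h: Vector = [0.0] * len(w_h)  # memory starts empty (all zeros)
    hs: List[Vector] = []
    ys: List[Vector] = []
    for x in xs:
        # Mix the current input with the previous hidden output (the memory).
        pre = [a + b for a, b in zip(matvec(w_x, x), matvec(w_h, h))]
        h = [math.tanh(p) for p in pre]
        # The output layer reads only the current hidden state.
        ys.append(matvec(w_y, h))
        hs.append(h)
    return hs, ys


# Hypothetical 1-input, 1-hidden, 1-output network fed a sequence of length 3.
hs, ys = elman_forward([[1.0], [0.0], [0.0]], w_x=[[1.0]], w_h=[[0.5]], w_y=[[2.0]])
# The hidden state stays nonzero at steps 2 and 3 even though the input is 0,
# because the memory from earlier steps is fed back in.
print(hs)
print(ys)
```

Training such a network would additionally need backpropagation through the unrolled sequence, which this sketch does not attempt.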

As mentioned in the History concept, here is the original Elman Network publication from 1990. The link is provided because the paper is a significant milestone in the world of RNNs. For a simpler overview, you can take a look at the following additional info.

Let's continue now to the next video with more information about RNNs.